Part of the graphic design work when the Radio Panik website was redone, a long time ago, was giving it a dedicated typeface: Reglo, created by Open Source Publishing.

Reglo is a font so tough that you can seriously mistreat it. The font was designed by Sebastien Sanfilippo in autumn 2009 and is used for Radio Panik identity, among other.

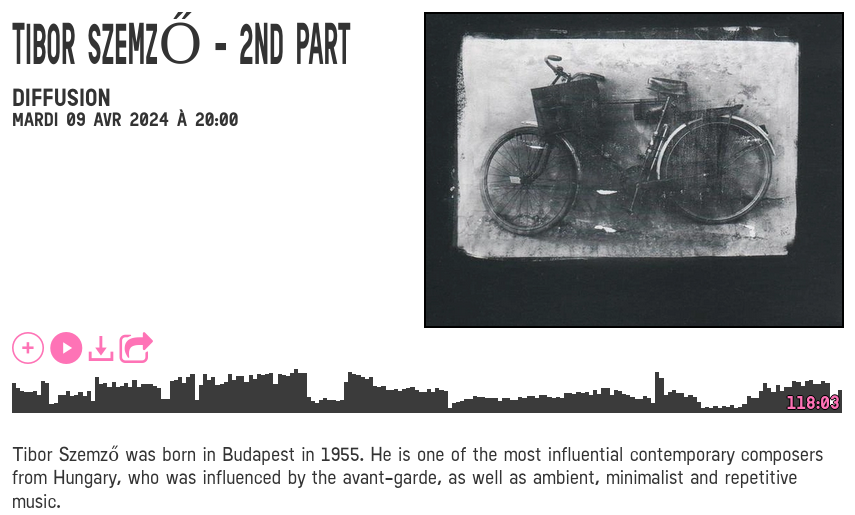

Over time, as the website got used, new characters occasionally needed to be drawn; typically this happens when I stumble on a page with a title like this one:

It is really noticeable in the title because the dimensions are so different, but the substitution happens in the body text too, where the missing ő is replaced by the ő from the default font.

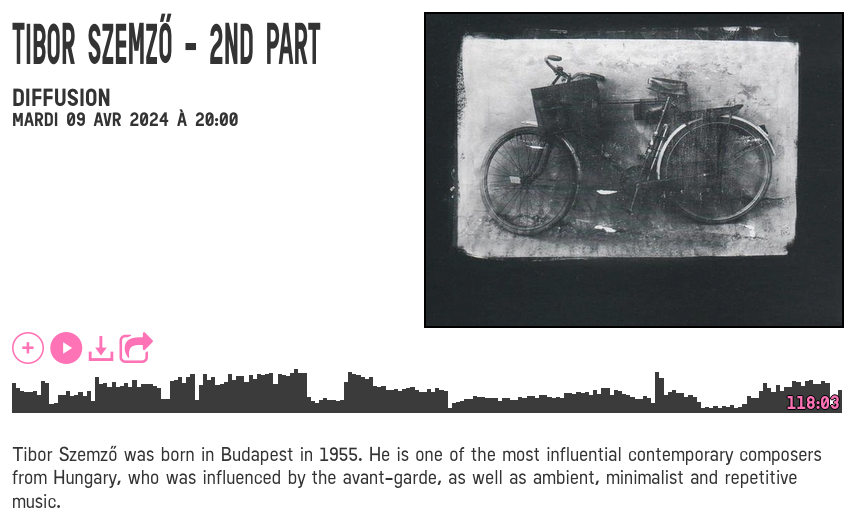

We never built up the habit of asking for these missing characters to be added whenever we ran into them; instead I got used to drawing them myself, and "drawing" is a big word when all it means here is taking an existing o and sticking on two accents that also already exist ("a font so tough that you can seriously mistreat it"). And for what is ultimately very little work, the result on the site is much better:

Anyway, that is more or less how I picked up the basics of FontForge, without any pretension.

A few months ago, in a bookshop, I came across "La typographie post-binaire" by Camille Circlude:

How can we move beyond the gender binary that characterises the French language? This book recontextualises the emergence of inclusive writing, questions its uses, and surveys the many possible alternatives that have appeared over many years. (…) The design of inclusive, non-binary and post-binary typographic characters now makes it possible to give material form to queer, non-binary, genderfluid, agender and genderfucker existences in the shared, symbolic spaces that are language and writing.

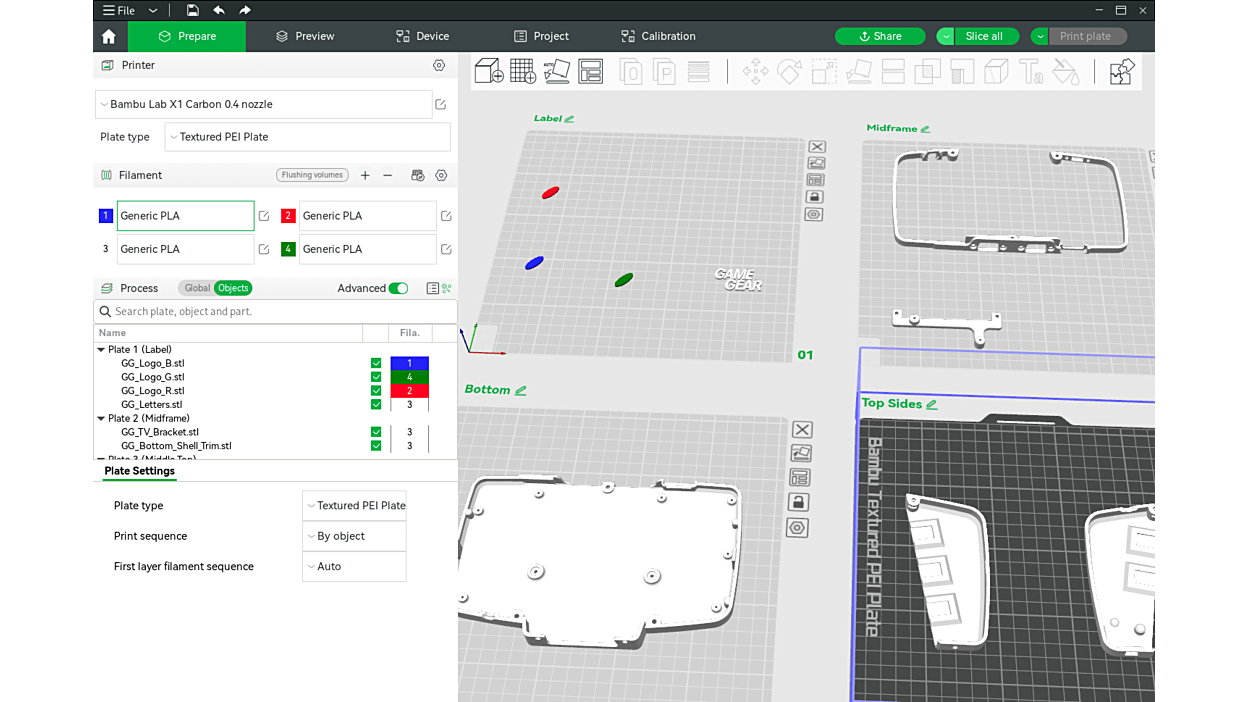

The book has history, analysis and ideas, and also a bit of technique, particularly through the Queer Unicode Initiative (QUNI), which has worked on standardising the use of reserved areas of Unicode for defining new ligatures and glyphs. Of course this made me want to experiment with Reglo and see what could be done.

The radio's website does not offer many opportunities, but there is this sentence on the about page: « Si vous êtes curieux·se, ouvert·e à l’autre, créatif·ve, engagé·e, rêveur·euse… » ("If you are curious, open to others, creative, committed, a dreamer…"), whose words could become:

While I was at it, I made a few more.

QUNI also provides what is needed for automatic transposition, meaning that é·e in the text automatically produces the appropriate ligature (it is fairly simple, expressed like this: sub \eacute \periodcentered \e by \eacute_e;). I have not integrated that yet, though, so for now nothing is visible on the radio's website; it remains a purely local experiment.
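To give an idea of what writing those rules in bulk could look like, here is a small Python sketch that generates feature-file substitution lines in the same shape as the one quoted above. It is only an illustration under assumptions: the helper names `ligature_rule` and `liga_feature` are made up for this post, the rule that a ligature glyph is named after its first and last components is extrapolated from `\eacute_e`, the `t_e` glyph is hypothetical, and wrapping everything in a `liga` feature is my guess, not something taken from QUNI.

```python
def ligature_rule(components):
    """Build one feature-file substitution rule from a list of glyph names."""
    # Glyph names are written with a leading backslash, as in the quoted rule.
    sequence = " ".join("\\" + name for name in components)
    # Assumption: the ligature glyph is named after its first and last
    # components, following the \eacute_e example.
    target = "\\" + components[0] + "_" + components[-1]
    return "sub " + sequence + " by " + target + ";"


def liga_feature(sequences):
    """Wrap the generated rules in a `liga` feature block (assumed feature tag)."""
    rules = ["    " + ligature_rule(seq) for seq in sequences]
    return "\n".join(["feature liga {"] + rules + ["} liga;"])


# Only the first sequence comes from the post; the second is hypothetical.
SEQUENCES = [
    ["eacute", "periodcentered", "e"],  # engagé·e
    ["t", "periodcentered", "e"],       # ouvert·e
]

print(liga_feature(SEQUENCES))
```

The resulting text would still have to be merged into the font, and the ligature glyphs themselves drawn first; this only covers the repetitive part of writing the rules.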

example of Debian and Ubuntu
